Introduction

Many cloud providers now offer some form of managed Kubernetes. This is great for anyone wishing to offload the time-consuming (and error-prone) task of setting up and maintaining a self-hosted cluster. However, even with an array of configuration options, managed offerings can struggle to meet the specific requirements of a developer or organisation in the way a custom-configured cluster can. Automating the process with Terraform goes a long way towards mitigating the concerns a self-managed cluster presents while retaining its flexibility.

What’s the goal of this tutorial?

This tutorial covers the automated deployment of a High Availability K3s cluster on the DigitalOcean (DO) platform using a Terraform module. The module gets a fully functioning (production-ready) K3s cluster up and running in under 10 minutes.

The choice of DigitalOcean as the cloud platform for the module was mostly arbitrary. Like many cloud providers, DigitalOcean offers block storage, managed databases, virtual private cloud networks and dedicated load balancers. These are some of the base components provisioned by the Terraform module in this tutorial. The module itself could be forked to target other cloud providers supported by Terraform, such as AWS, Google Cloud or Azure.

See https://github.com/aigisuk/terraform-digitalocean-ha-k3s for more detailed information about the module and its use.

What is K3s? And why use it instead of Kubernetes?

K3s is a lightweight, fully conformant, production-ready distribution of Kubernetes. It bundles many Kubernetes technologies into a single binary, which simplifies the deployment, operation and maintenance of a cluster while remaining a conformant and secure distribution. These features make K3s a great choice for development (or production) environments, especially in resource-constrained edge deployments.

Prerequisites

- A DigitalOcean account & Personal Access Token (with Read/Write permissions)

- An SSH Key pair

- Terraform ≥ v0.13

- Basic knowledge of Kubernetes & Terraform

Step 1 - Clone the Example Repository

Clone the example Terraform configuration repository https://github.com/colinwilson/example-terraform-modules/tree/terraform-digitalocean-ha-k3s

git clone -b terraform-digitalocean-ha-k3s https://github.com/colinwilson/example-terraform-modules

example-terraform-modules/
|-- .gitignore
|-- README.md
|-- main.tf
|-- outputs.tf
|-- terraform.tfvars
`-- variables.tf

Alternatively, manually create the above file structure by copying the following code snippets.

Copy and paste the following code to your main.tf file:

# main.tf
terraform {
  required_providers {
    digitalocean = {
      source = "digitalocean/digitalocean"
    }
  }
}

provider "digitalocean" {
  token = var.do_token
}

module "ha-k3s" {
  source = "github.com/aigisuk/terraform-digitalocean-ha-k3s"

  do_token             = var.do_token
  ssh_key_fingerprints = var.ssh_key_fingerprints
}

Copy and paste the following code to your outputs.tf file:

# outputs.tf
output "cluster_summary" {
  value = module.ha-k3s.cluster_summary
}

Copy and paste the following code to your variables.tf file:

# variables.tf
# Required variables
variable "ssh_key_fingerprints" {}
variable "do_token" {}

Step 2 - Set the Required Input Variables

The default module configuration requires only two inputs. Replace the example values in the terraform.tfvars file with your own DigitalOcean Personal Access Token and SSH public key fingerprint:

# terraform.tfvars (example)

# Your DigitalOcean Personal Access Token (Read & Write)
do_token = "7f5ef8eb151e3c81cd893c6...."

# Your SSH Public Key Fingerprint
ssh_key_fingerprints = ["00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff"]
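The two values can also be obtained from the command line. The sketch below is one possible approach, not part of the module: the function names and file paths are made up for illustration. It assumes the fingerprint wanted is the MD5 form (16 colon-separated hex pairs, as in the example above) of a key already added to your DigitalOcean account. It also relies on Terraform reading input variables from environment variables named TF_VAR_<name>. Values in terraform.tfvars take precedence over environment variables, so remove the do_token line from that file if you supply the token this way.

```shell
# Print the MD5 fingerprint of an SSH public key in the colon-separated
# form used in terraform.tfvars. Requires OpenSSH's ssh-keygen.
ssh_fingerprint_md5() {
  # ssh-keygen prints: "<bits> MD5:<fingerprint> <comment> (<type>)"
  ssh-keygen -l -E md5 -f "$1" | awk '{ sub(/^MD5:/, "", $2); print $2 }'
}

# Export the token stored in a file as TF_VAR_do_token, which Terraform
# maps onto the input variable "do_token".
load_do_token() {
  token_file="$1"
  if [ ! -r "$token_file" ]; then
    echo "cannot read token file: $token_file" >&2
    return 1
  fi
  # Use the first line only and drop any carriage return.
  token="$(head -n 1 "$token_file" | tr -d '\r')"
  if [ -z "$token" ]; then
    echo "token file is empty: $token_file" >&2
    return 1
  fi
  export TF_VAR_do_token="$token"
}

# Usage (both paths are placeholders):
#   ssh_fingerprint_md5 ~/.ssh/id_ed25519.pub
#   load_do_token ~/.config/do_token && terraform plan
```

Keeping the token outside the project directory reduces the chance of committing it by accident.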

Step 3 - Initialize the Terraform Configuration & Provision the K3s Cluster

Switch to your example-terraform-modules directory and initialize your configuration by running terraform init.

terraform init

Terraform will proceed to download the module and the required provider plugins.

Example 'terraform init' OUTPUT.

Initializing modules...

Downloading github.com/aigisuk/terraform-digitalocean-ha-k3s for ha-k3s...

- ha-k3s in .terraform\modules\ha-k3s

Initializing the backend...

Initializing provider plugins...

- Finding latest version of digitalocean/digitalocean...

- Finding latest version of hashicorp/random...

- Installing digitalocean/digitalocean v2.9.0...

- Installed digitalocean/digitalocean v2.9.0 (signed by a HashiCorp partner, key ID F82037E524B9C0E8)

...

Terraform has been successfully initialized!

Now run terraform apply to apply your configuration and deploy the K3s cluster.

terraform apply

Example 'terraform apply' OUTPUT.

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the

following symbols:

+ create

Terraform will perform the following actions:

# module.ha-k3s.digitalocean_database_cluster.k3s will be created

+ resource "digitalocean_database_cluster" "k3s" {

+ database = (known after apply)

+ engine = "pg"

+ host = (known after apply)

+ id = (known after apply)

+ name = "k3s-ext-datastore"

+ node_count = 1

+ password = (sensitive value)

+ port = (known after apply)

+ private_host = (known after apply)

+ private_network_uuid = (known after apply)

+ private_uri = (sensitive value)

+ region = "fra1"

+ size = "db-s-1vcpu-1gb"

+ uri = (sensitive value)

+ urn = (known after apply)

+ user = (known after apply)

+ version = "11"

}

# module.ha-k3s.digitalocean_database_firewall.k3s will be created

+ resource "digitalocean_database_firewall" "k3s" {

+ cluster_id = (known after apply)

+ id = (known after apply)

+ rule {

+ created_at = (known after apply)

+ type = "tag"

+ uuid = (known after apply)

+ value = "k3s_server"

}

}

# module.ha-k3s.digitalocean_database_user.dbuser will be created

+ resource "digitalocean_database_user" "dbuser" {

+ cluster_id = (known after apply)

+ id = (known after apply)

+ name = "k3s_default_user"

+ password = (sensitive value)

+ role = (known after apply)

}

# module.ha-k3s.digitalocean_droplet.k3s_agent[0] will be created

+ resource "digitalocean_droplet" "k3s_agent" {

+ backups = false

+ created_at = (known after apply)

+ disk = (known after apply)

+ id = (known after apply)

+ image = "ubuntu-20-04-x64"

+ ipv4_address = (known after apply)

+ ipv4_address_private = (known after apply)

+ ipv6 = false

+ ipv6_address = (known after apply)

+ locked = (known after apply)

+ memory = (known after apply)

+ monitoring = true

+ name = (known after apply)

+ price_hourly = (known after apply)

+ price_monthly = (known after apply)

+ private_networking = true

+ region = "fra1"

+ resize_disk = true

+ size = "s-1vcpu-2gb"

+ ssh_keys = [

+ "00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff",

]

+ status = (known after apply)

+ tags = [

+ "k3s_agent",

]

+ urn = (known after apply)

+ user_data = (sensitive)

+ vcpus = (known after apply)

+ volume_ids = (known after apply)

+ vpc_uuid = (known after apply)

}

# module.ha-k3s.digitalocean_droplet.k3s_server[0] will be created

+ resource "digitalocean_droplet" "k3s_server" {

+ backups = false

+ created_at = (known after apply)

+ disk = (known after apply)

+ id = (known after apply)

+ image = "ubuntu-20-04-x64"

+ ipv4_address = (known after apply)

+ ipv4_address_private = (known after apply)

+ ipv6 = false

+ ipv6_address = (known after apply)

+ locked = (known after apply)

+ memory = (known after apply)

+ monitoring = true

+ name = (known after apply)

+ price_hourly = (known after apply)

+ price_monthly = (known after apply)

+ private_networking = true

+ region = "fra1"

+ resize_disk = true

+ size = "s-1vcpu-2gb"

+ ssh_keys = [

+ "00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff",

]

+ status = (known after apply)

+ tags = [

+ "k3s_server",

]

+ urn = (known after apply)

+ user_data = (sensitive)

+ vcpus = (known after apply)

+ volume_ids = (known after apply)

+ vpc_uuid = (known after apply)

}

# module.ha-k3s.digitalocean_droplet.k3s_server_init[0] will be created

+ resource "digitalocean_droplet" "k3s_server_init" {

+ backups = false

+ created_at = (known after apply)

+ disk = (known after apply)

+ id = (known after apply)

+ image = "ubuntu-20-04-x64"

+ ipv4_address = (known after apply)

+ ipv4_address_private = (known after apply)

+ ipv6 = false

+ ipv6_address = (known after apply)

+ locked = (known after apply)

+ memory = (known after apply)

+ monitoring = true

+ name = (known after apply)

+ price_hourly = (known after apply)

+ price_monthly = (known after apply)

+ private_networking = true

+ region = "fra1"

+ resize_disk = true

+ size = "s-1vcpu-2gb"

+ ssh_keys = [

+ "00:11:22:33:44:55:66:77:88:99:aa:bb:cc:dd:ee:ff",

]

+ status = (known after apply)

+ tags = [

+ "k3s_server",

]

+ urn = (known after apply)

+ user_data = (sensitive)

+ vcpus = (known after apply)

+ volume_ids = (known after apply)

+ vpc_uuid = (known after apply)

}

# module.ha-k3s.digitalocean_firewall.ccm_firewall will be created

+ resource "digitalocean_firewall" "ccm_firewall" {

+ created_at = (known after apply)

+ id = (known after apply)

+ name = "ccm-firewall"

+ pending_changes = (known after apply)

+ status = (known after apply)

+ outbound_rule {

+ destination_addresses = [

+ "0.0.0.0/0",

+ "::/0",

]

+ destination_droplet_ids = []

+ destination_load_balancer_uids = []

+ destination_tags = []

+ protocol = "icmp"

}

}

# module.ha-k3s.digitalocean_firewall.k3s_firewall will be created

+ resource "digitalocean_firewall" "k3s_firewall" {

+ created_at = (known after apply)

+ id = (known after apply)

+ name = "k3s-firewall"

+ pending_changes = (known after apply)

+ status = (known after apply)

+ tags = [

+ "k3s_agent",

+ "k3s_server",

]

+ inbound_rule {

+ protocol = "icmp"

+ source_addresses = [

+ "10.10.10.0/24",

]

+ source_droplet_ids = []

+ source_load_balancer_uids = []

+ source_tags = []

}

+ inbound_rule {

+ port_range = "1-65535"

+ protocol = "tcp"

+ source_addresses = [

+ "10.10.10.0/24",

]

+ source_droplet_ids = []

+ source_load_balancer_uids = []

+ source_tags = []

}

+ inbound_rule {

+ port_range = "1-65535"

+ protocol = "udp"

+ source_addresses = [

+ "10.10.10.0/24",

]

+ source_droplet_ids = []

+ source_load_balancer_uids = []

+ source_tags = []

}

+ inbound_rule {

+ port_range = "22"

+ protocol = "tcp"

+ source_addresses = [

+ "0.0.0.0/0",

+ "::/0",

]

+ source_droplet_ids = []

+ source_load_balancer_uids = []

+ source_tags = []

}

+ outbound_rule {

+ destination_addresses = [

+ "0.0.0.0/0",

+ "::/0",

]

+ destination_droplet_ids = []

+ destination_load_balancer_uids = []

+ destination_tags = []

+ port_range = "1-65535"

+ protocol = "tcp"

}

+ outbound_rule {

+ destination_addresses = [

+ "0.0.0.0/0",

+ "::/0",

]

+ destination_droplet_ids = []

+ destination_load_balancer_uids = []

+ destination_tags = []

+ port_range = "1-65535"

+ protocol = "udp"

}

}

# module.ha-k3s.digitalocean_loadbalancer.k3s_lb will be created

+ resource "digitalocean_loadbalancer" "k3s_lb" {

+ algorithm = "round_robin"

+ droplet_ids = (known after apply)

+ droplet_tag = "k3s_server"

+ enable_backend_keepalive = false

+ enable_proxy_protocol = false

+ id = (known after apply)

+ ip = (known after apply)

+ name = "k3s-api-loadbalancer"

+ redirect_http_to_https = false

+ region = "fra1"

+ size = "lb-small"

+ status = (known after apply)

+ urn = (known after apply)

+ vpc_uuid = (known after apply)

+ forwarding_rule {

+ certificate_id = (known after apply)

+ certificate_name = (known after apply)

+ entry_port = 6443

+ entry_protocol = "https"

+ target_port = 6443

+ target_protocol = "https"

+ tls_passthrough = true

}

+ healthcheck {

+ check_interval_seconds = 10

+ healthy_threshold = 5

+ port = 6443

+ protocol = "tcp"

+ response_timeout_seconds = 5

+ unhealthy_threshold = 3

}

+ sticky_sessions {

+ cookie_name = (known after apply)

+ cookie_ttl_seconds = (known after apply)

+ type = (known after apply)

}

}

# module.ha-k3s.digitalocean_project.k3s_cluster will be created

+ resource "digitalocean_project" "k3s_cluster" {

+ created_at = (known after apply)

+ description = "k3s Cluster"

+ environment = "Development"

+ id = (known after apply)

+ is_default = (known after apply)

+ name = "k3s-cluster"

+ owner_id = (known after apply)

+ owner_uuid = (known after apply)

+ purpose = "HA K3s (Kubernetes) Cluster"

+ resources = (known after apply)

+ updated_at = (known after apply)

}

# module.ha-k3s.digitalocean_project_resources.k3s_agent_nodes[0] will be created

+ resource "digitalocean_project_resources" "k3s_agent_nodes" {

+ id = (known after apply)

+ project = (known after apply)

+ resources = (known after apply)

}

# module.ha-k3s.digitalocean_project_resources.k3s_api_server_lb will be created

+ resource "digitalocean_project_resources" "k3s_api_server_lb" {

+ id = (known after apply)

+ project = (known after apply)

+ resources = (known after apply)

}

# module.ha-k3s.digitalocean_project_resources.k3s_ext_datastore will be created

+ resource "digitalocean_project_resources" "k3s_ext_datastore" {

+ id = (known after apply)

+ project = (known after apply)

+ resources = (known after apply)

}

# module.ha-k3s.digitalocean_project_resources.k3s_init_server_node[0] will be created

+ resource "digitalocean_project_resources" "k3s_init_server_node" {

+ id = (known after apply)

+ project = (known after apply)

+ resources = (known after apply)

}

# module.ha-k3s.digitalocean_project_resources.k3s_server_nodes[0] will be created

+ resource "digitalocean_project_resources" "k3s_server_nodes" {

+ id = (known after apply)

+ project = (known after apply)

+ resources = (known after apply)

}

# module.ha-k3s.digitalocean_vpc.k3s_vpc will be created

+ resource "digitalocean_vpc" "k3s_vpc" {

+ created_at = (known after apply)

+ default = (known after apply)

+ id = (known after apply)

+ ip_range = "10.10.10.0/24"

+ name = "k3s-vpc-01"

+ region = "fra1"

+ urn = (known after apply)

}

# module.ha-k3s.random_id.agent_node_id[0] will be created

+ resource "random_id" "agent_node_id" {

+ b64_std = (known after apply)

+ b64_url = (known after apply)

+ byte_length = 2

+ dec = (known after apply)

+ hex = (known after apply)

+ id = (known after apply)

}

# module.ha-k3s.random_id.server_node_id[0] will be created

+ resource "random_id" "server_node_id" {

+ b64_std = (known after apply)

+ b64_url = (known after apply)

+ byte_length = 2

+ dec = (known after apply)

+ hex = (known after apply)

+ id = (known after apply)

}

# module.ha-k3s.random_id.server_node_id[1] will be created

+ resource "random_id" "server_node_id" {

+ b64_std = (known after apply)

+ b64_url = (known after apply)

+ byte_length = 2

+ dec = (known after apply)

+ hex = (known after apply)

+ id = (known after apply)

}

# module.ha-k3s.random_password.k3s_token will be created

+ resource "random_password" "k3s_token" {

+ id = (known after apply)

+ length = 48

+ lower = true

+ min_lower = 0

+ min_numeric = 0

+ min_special = 0

+ min_upper = 0

+ number = true

+ result = (sensitive value)

+ special = false

+ upper = false

}

Plan: 20 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ cluster_summary = {

+ agents = [

+ {

+ id = (known after apply)

+ ip_private = (known after apply)

+ ip_public = (known after apply)

+ name = (known after apply)

+ price = (known after apply)

},

]

+ api_server_ip = (known after apply)

+ cluster_region = "fra1"

+ servers = [

+ {

+ id = (known after apply)

+ ip_private = (known after apply)

+ ip_public = (known after apply)

+ name = (known after apply)

+ price = (known after apply)

},

+ {

+ id = (known after apply)

+ ip_private = (known after apply)

+ ip_public = (known after apply)

+ name = (known after apply)

+ price = (known after apply)

},

]

}

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

Respond to the prompt with yes to apply the changes and continue.

Terraform will now start provisioning the K3s cluster resources on DigitalOcean. Once complete (this should take under 10 minutes), the command output presents a summary of the cluster components and configuration, e.g. IP addresses and node names.

By default, the module provisions 2 server nodes, 1 agent node, a Postgres database to serve as the cluster datastore and a load balancer to proxy API requests to the server nodes.

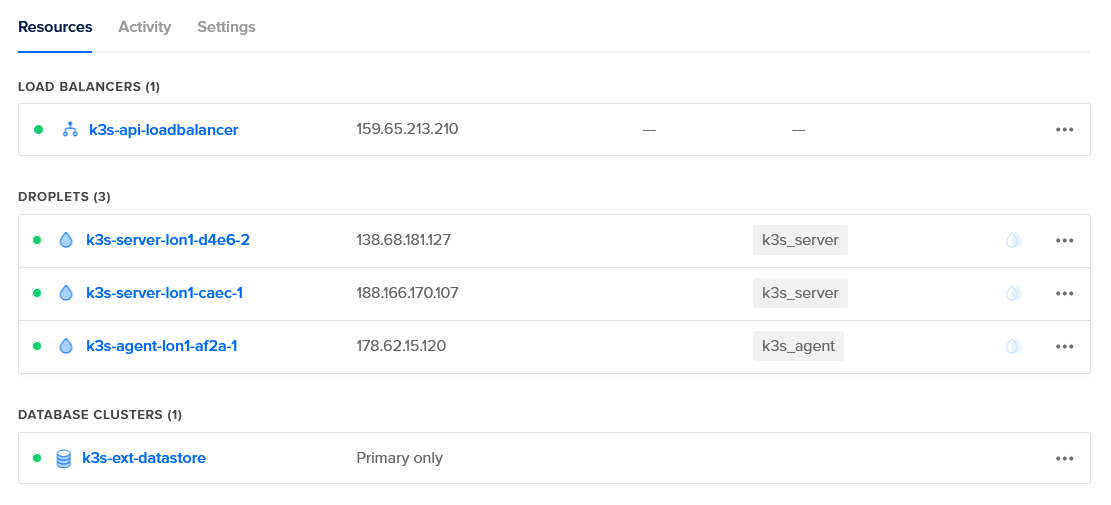

Deployed K3s resources viewed via the DigitalOcean dashboard.

Example configuration OUTPUT.

Apply complete! Resources: 20 added, 0 changed, 0 destroyed.

Outputs:

cluster_summary = {

"agents" = [

{

"id" = "252338674"

"ip_private" = "10.10.10.4"

"ip_public" = "203.0.113.10"

"name" = "k3s-agent-fra1-bc55-1"

"price" = 10

},

]

"api_server_ip" = "198.51.100.10"

"cluster_region" = "fra1"

"servers" = [

{

"id" = "252338926"

"ip_private" = "10.10.10.5"

"ip_public" = "203.0.113.11"

"name" = "k3s-server-fra1-7119-1"

"price" = 10

},

{

"id" = "252338968"

"ip_private" = "10.10.10.6"

"ip_public" = "203.0.113.11"

"name" = "k3s-server-fra1-12cf-2"

"price" = 10

},

]

}

This information is needed for direct access to the cluster nodes. It is also useful if you are provisioning the cluster alongside existing resources, or as input for dependent modules in a configuration.

Step 4 - Accessing the Cluster Externally with kubectl

Now that the cluster is set up, you will most likely want to manage it using kubectl from outside the cluster. This can be achieved by copying the cluster's kubeconfig to a local machine with kubectl installed.

SSH into one of the provisioned server nodes via its public IP address (from the configuration output). Copy the /etc/rancher/k3s/k3s.yaml file and save it to the kubeconfig location (~/.kube/config) on your local machine. Alternatively, use the scp (secure copy) command to copy the config straight to your local machine. Note that either approach overwrites any existing ~/.kube/config.

scp -i ~/.ssh/your_private_key root@203.0.113.11:/etc/rancher/k3s/k3s.yaml ~/.kube/config

Replace 127.0.0.1 with the public IP address of the provisioned API Proxy Load Balancer (this is the value for the api_server_ip key from the cluster_summary output, i.e. 198.51.100.10 using the example output above).

sed -i -e "s/127\.0\.0\.1/198.51.100.10/g" ~/.kube/config
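The copy-and-edit steps above replace whatever was previously in ~/.kube/config. As a minimal sketch of an alternative, the function below writes the rewritten kubeconfig to a separate file, which can then be selected through the KUBECONFIG environment variable. The function name and file names are illustrative assumptions. The sketch also assumes the downloaded file contains a line of the form "server: https://127.0.0.1:6443".

```shell
# point_kubeconfig_at_lb SRC DEST LB_IP
# Copy the kubeconfig SRC to DEST, replacing the loopback API address
# (127.0.0.1) on the "server:" line with the load balancer address LB_IP.
point_kubeconfig_at_lb() {
  src="$1"
  dest="$2"
  lb_ip="$3"
  if [ ! -r "$src" ]; then
    echo "cannot read $src" >&2
    return 1
  fi
  # The dots are escaped so they match literally; only "server:" lines change.
  sed -e "/server:/ s/127\.0\.0\.1/${lb_ip}/" "$src" > "$dest" || return 1
  # The kubeconfig holds cluster credentials, so restrict it to the owner.
  chmod 600 "$dest"
}

# Usage (IP addresses taken from the example output above):
#   scp -i ~/.ssh/your_private_key root@203.0.113.11:/etc/rancher/k3s/k3s.yaml ./k3s.yaml
#   point_kubeconfig_at_lb ./k3s.yaml ~/.kube/k3s-do.yaml 198.51.100.10
#   export KUBECONFIG=~/.kube/k3s-do.yaml
```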

You can now use kubectl to check the cluster is functioning normally. Run kubectl get nodes to view the status and information of the cluster nodes:

> kubectl get nodes
NAME                     STATUS   ROLES                  AGE    VERSION
k3s-server-fra1-12cf-2   Ready    control-plane,master   88s    v1.21.1+k3s1
k3s-server-fra1-7119-1   Ready    control-plane,master   112s   v1.21.1+k3s1
k3s-agent-fra1-bc55-1    Ready    <none>                 70s    v1.21.1+k3s1

Status Ready indicates the cluster nodes are healthy and ready for application deployments (pods).
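For scripted checks, the same readiness test can be automated. The sketch below reads the output of kubectl get nodes --no-headers on standard input and prints the name of any node whose STATUS column is not exactly Ready. The function name is an assumption made for this example.

```shell
# not_ready_nodes: print the names of nodes whose STATUS is not "Ready".
# Expects `kubectl get nodes --no-headers` output on standard input.
# Exit status is 0 when every node is Ready and 1 otherwise.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad + 0 }'
}

# Usage:
#   kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"
```

A cordoned node reports a combined status such as Ready,SchedulingDisabled, so this strict comparison lists it as well.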

Step 5 - Clean Up

You can destroy the cluster by running terraform destroy. Respond to the prompt with yes and Terraform will destroy all the resources provisioned during the terraform apply process.

N.B. Additional billable DO resources provisioned by applications deployed to the cluster are not managed by Terraform and will therefore persist even after running terraform destroy. Failing to destroy resources provisioned outside the module could result in charges from DigitalOcean.

terraform destroy

Example 'terraform destroy' OUTPUT.

module.ha-k3s.digitalocean_firewall.k3s_firewall: Refreshing state... [id=4a295ed0-0a37-4040-9f75-45d283b4bdec]

module.ha-k3s.digitalocean_database_cluster.k3s: Refreshing state... [id=84589f4c-e5a5-4f75-a0b9-c2f44f216dcd]

module.ha-k3s.digitalocean_loadbalancer.k3s_lb: Refreshing state... [id=da13bb03-c3db-409a-abe4-ee1d42f91fe9]

...

module.ha-k3s.digitalocean_firewall.ccm_firewall: Destruction complete after 1s

module.ha-k3s.digitalocean_loadbalancer.k3s_lb: Destruction complete after 1s

module.ha-k3s.digitalocean_database_cluster.k3s: Destroying... [id=84589f4c-e5a5-4f75-a0b9-c2f44f216dcd]

...

Destroy complete! Resources: 20 destroyed.

Summary

In this guide, you configured and provisioned a K3s cluster on the DigitalOcean platform using a Terraform module. You then copied its kubeconfig to a local machine for access and management, and checked that the cluster was functioning properly using kubectl.

The additional features and options the module in this tutorial provides are extensive and will be covered in more detail in future articles.